How much GPU memory is required for deep learning?

Curious about deep learning and GPU memory? The amount you need depends on your data, your model, and how you train it. This article walks through the factors that drive GPU memory requirements for deep learning and the techniques that keep them under control.

The aim is a practical balance between power and efficiency in your AI projects. So, how much GPU memory do you need? Let’s find out!

Factors Affecting GPU Memory Requirements

Understanding the factors that influence GPU memory requirements is crucial for optimizing deep learning projects. Let’s explore three key factors: dataset size, model complexity, and network architecture.

Dataset Size

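When it comes to deep learning, larger datasets are often assumed to demand more GPU memory. In practice, the full dataset usually stays on disk or in system RAM, and only the batch currently being processed occupies GPU memory. The size of each sample and the number of samples per batch therefore matter more than the total number of samples. Handling big datasets with limited GPU memory still requires strategic approaches.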

To tackle this challenge, data batching is a common technique. Instead of loading the entire dataset into memory at once, it is divided into smaller batches. This allows for efficient memory utilization, as only one batch is processed at a time.
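The batching idea can be sketched in a few lines. This is a minimal illustration rather than a production data loader: `iter_batches` is a hypothetical helper name, and the list of integers stands in for real samples that would normally be read lazily from disk.

```python
from typing import Iterator, List, Sequence, TypeVar

T = TypeVar("T")


def iter_batches(samples: Sequence[T], batch_size: int) -> Iterator[List[T]]:
    """Yield consecutive batches so only one batch is materialized at a time."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    for start in range(0, len(samples), batch_size):
        yield list(samples[start:start + batch_size])


# Stand-in dataset of 10 sample IDs (an assumption for illustration).
dataset = list(range(10))
batch_lengths = [len(batch) for batch in iter_batches(dataset, batch_size=4)]
print(batch_lengths)  # [4, 4, 2]
```

The last batch is smaller when the dataset size is not a multiple of the batch size, which is why many training loops either drop it or handle it explicitly.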

Model Complexity

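Complex models require more GPU memory for training due to the increased number of parameters and layers. During training, each parameter is accompanied by a gradient and, for most optimizers, additional optimizer state, and the activations of every layer must be kept for the backward pass. As models become more intricate, the memory demands grow accordingly. To optimize memory usage, techniques such as model pruning and parameter sharing can be employed.

Model pruning involves removing unnecessary or redundant parameters, which can reduce the memory footprint without significantly affecting accuracy, provided the pruned model is stored in a compact form.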
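To get a feel for how parameter count translates into memory, a back-of-the-envelope estimate can help. The sketch below assumes float32 values and an Adam-style optimizer that keeps two extra values per parameter; it deliberately ignores activations and framework overhead, so treat the result as a lower bound. The function name and the 100-million-parameter model are hypothetical.

```python
def estimate_training_bytes(num_params: int,
                            bytes_per_value: int = 4,
                            optimizer_states_per_param: int = 2) -> int:
    """Rough lower bound: weights + gradients + optimizer state (no activations)."""
    copies = 1 + 1 + optimizer_states_per_param  # weights, gradients, optimizer state
    return num_params * bytes_per_value * copies


GIB = 1024 ** 3
# Hypothetical 100-million-parameter model, float32, Adam-style optimizer.
total = estimate_training_bytes(100_000_000)
print(f"{total / GIB:.2f} GiB")  # 1.49 GiB
```

Activations often add far more than this on top, especially at large batch sizes, so measuring real usage remains the safer guide.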

Network Architecture

Different network architectures have varying GPU memory requirements. Popular architectures like Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs) have unique memory demands.

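CNNs are commonly used for image-related tasks. Their convolutional layers hold relatively few parameters, but the intermediate feature maps they produce can be large, and this activation memory grows with input resolution and batch size. RNNs, on the other hand, are often used for sequential data and have memory requirements influenced by the length of the input sequence, because the hidden state of every time step is kept for backpropagation.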
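The two scaling behaviors can be made concrete with simple arithmetic. The sketch below assumes float32 activations and counts only a single feature map or a single layer of hidden states; the helper names and the example shapes are illustrative assumptions, not measurements of any particular model.

```python
def conv_activation_bytes(batch: int, channels: int, height: int, width: int,
                          bytes_per_value: int = 4) -> int:
    """Memory for one convolutional feature map across a batch."""
    return batch * channels * height * width * bytes_per_value


def rnn_hidden_state_bytes(batch: int, seq_len: int, hidden: int,
                           bytes_per_value: int = 4) -> int:
    """Memory for the hidden states of one recurrent layer, one per time step."""
    return batch * seq_len * hidden * bytes_per_value


MIB = 1024 ** 2
# One 64-channel feature map at 112x112 for a batch of 32 images.
conv_mib = conv_activation_bytes(32, 64, 112, 112) / MIB
# Doubling the sequence length doubles the stored hidden states.
rnn_short_mib = rnn_hidden_state_bytes(32, 100, 512) / MIB
rnn_long_mib = rnn_hidden_state_bytes(32, 200, 512) / MIB
print(conv_mib, rnn_short_mib, rnn_long_mib)  # 98.0 6.25 12.5
```

A deep network stores many such feature maps at once, which is why activation memory frequently exceeds the memory taken by the weights themselves.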

Optimizing GPU Memory Usage

Efficiently managing GPU memory is essential for maximizing the performance of your deep learning projects. Let’s explore some techniques and strategies to optimize GPU memory usage.

Memory Management Techniques

Memory pooling and data parallelism are two effective techniques to optimize GPU memory usage. Memory pooling involves reusing memory blocks, reducing the need for frequent memory allocations and deallocations. This technique helps prevent memory fragmentation and improves memory utilization.

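Data parallelism involves splitting each batch across multiple GPUs, with every GPU holding a full copy of the model. Because each GPU processes only its share of the batch, the activation memory needed per GPU goes down, enabling efficient processing of large batches and datasets. The weights, gradients, and optimizer state are still replicated on every device, so data parallelism helps most when activations, not the model itself, are what exhaust GPU memory.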
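As a small illustration of how a batch is divided under data parallelism, the following sketch computes the per-GPU batch sizes for a given global batch. The helper name is hypothetical, and real frameworks handle this split for you; the point is only to show that per-device work shrinks as devices are added.

```python
from typing import List


def per_gpu_batch_sizes(global_batch: int, num_gpus: int) -> List[int]:
    """Split a global batch as evenly as possible across GPUs."""
    if num_gpus <= 0:
        raise ValueError("num_gpus must be positive")
    base, extra = divmod(global_batch, num_gpus)
    # The first `extra` GPUs take one additional sample each.
    return [base + (1 if rank < extra else 0) for rank in range(num_gpus)]


print(per_gpu_batch_sizes(256, 4))  # [64, 64, 64, 64]
print(per_gpu_batch_sizes(10, 4))   # [3, 3, 2, 2]
```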

Memory-efficient Model Design

Designing models with a lower memory footprint is crucial for optimizing GPU memory usage. Techniques like parameter sharing and pruning can help achieve this. Parameter sharing allows multiple parts of a model to reuse the same set of parameters, so they are stored only once. Pruning removes unnecessary or redundant parameters, ideally with little loss in accuracy.
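A minimal sketch of magnitude-based pruning, one common way to decide which parameters count as redundant, is shown below. It is an assumption that magnitude is the chosen criterion; the source does not name one. Note that zeroing weights in a dense list saves no memory by itself, so the example also converts the result to a sparse index-to-value mapping. Both helper names are hypothetical.

```python
from typing import Dict, List


def magnitude_prune(weights: List[float], sparsity: float) -> List[float]:
    """Zero out the given fraction of weights with the smallest absolute value."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")
    k = int(len(weights) * sparsity)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(order[:k])  # indices of the k smallest-magnitude weights
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


def to_sparse(weights: List[float]) -> Dict[int, float]:
    """Keep only non-zero weights, keyed by their original index."""
    return {i: w for i, w in enumerate(weights) if w != 0.0}


weights = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02]
pruned = magnitude_prune(weights, sparsity=0.5)
print(pruned)             # [0.8, 0.0, 0.3, 0.0, -0.6, 0.0]
print(to_sparse(pruned))  # {0: 0.8, 2: 0.3, 4: -0.6}
```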

Data Preprocessing

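Data preprocessing plays a crucial role in reducing GPU memory usage. Techniques like downscaling, cropping, and compression can help achieve this. Data augmentation, which generates additional training samples by applying random transformations to the existing data, is often part of the same pipeline.

Augmentation expands the effective training dataset and improves generalization, but it does not reduce the memory requirements per sample. Those are set by the input resolution and numeric precision, so resizing images or truncating long sequences is what actually lowers per-sample memory.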
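Per-sample memory is determined mainly by input resolution and numeric precision, which a quick calculation makes visible. The sketch below compares the raw size of one batch of RGB images at different resolutions and data types; the helper name, batch size, and resolutions are assumptions chosen for illustration.

```python
def image_batch_bytes(batch: int, height: int, width: int,
                      channels: int = 3, bytes_per_value: int = 4) -> int:
    """Raw size of one batch of images at a given resolution and precision."""
    return batch * height * width * channels * bytes_per_value


MIB = 1024 ** 2
full_res = image_batch_bytes(32, 512, 512) / MIB                      # float32, 512x512
resized = image_batch_bytes(32, 224, 224) / MIB                       # float32, 224x224
resized_u8 = image_batch_bytes(32, 224, 224, bytes_per_value=1) / MIB  # uint8, 224x224
print(full_res, resized, resized_u8)  # 96.0 18.375 4.59375
```

The same ratio carries through to every activation computed from those inputs, so downscaling typically saves much more than the input tensor alone suggests.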

GPU Memory Recommendations for Popular Deep Learning Tasks

When it comes to deep learning tasks, having the right amount of GPU memory is crucial for optimal performance. Let’s explore the recommended GPU memory ranges for popular tasks like image classification, natural language processing (NLP), and object detection.

Image Classification

For image classification tasks, the required GPU memory can vary depending on the complexity of the model and the size of the input images.

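As a general guideline, a GPU with at least 4GB of memory is recommended for basic image classification tasks using popular models like ResNet or VGG at modest batch sizes and input resolutions. However, for larger models such as the bigger EfficientNet variants or Inception-ResNet, or for training at higher resolutions, a GPU with 8GB or more memory is preferable. Treat these figures as starting points, since actual usage depends heavily on batch size.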

Natural Language Processing

In NLP tasks such as language translation and sentiment analysis, the memory requirements can vary based on the complexity and size of the language models, as well as the sequence length. For basic NLP tasks, a GPU with 4GB of memory should suffice. However, for larger language models like BERT or GPT-2, GPUs with 8GB or more memory are recommended, and fine-tuning the largest variants of these models can require considerably more.

Object Detection

Object detection tasks involve analyzing and identifying objects within images. The GPU memory requirements for object detection can vary depending on the complexity of the models and the size of the input images.

For basic object detection tasks using popular models like SSD or YOLO, a GPU with 4GB of memory is recommended. However, for more advanced models like Faster R-CNN or Mask R-CNN, a GPU with 8GB or more memory is preferable.

Frequently Asked Questions

Q1: Is GPU memory the same as regular system memory?

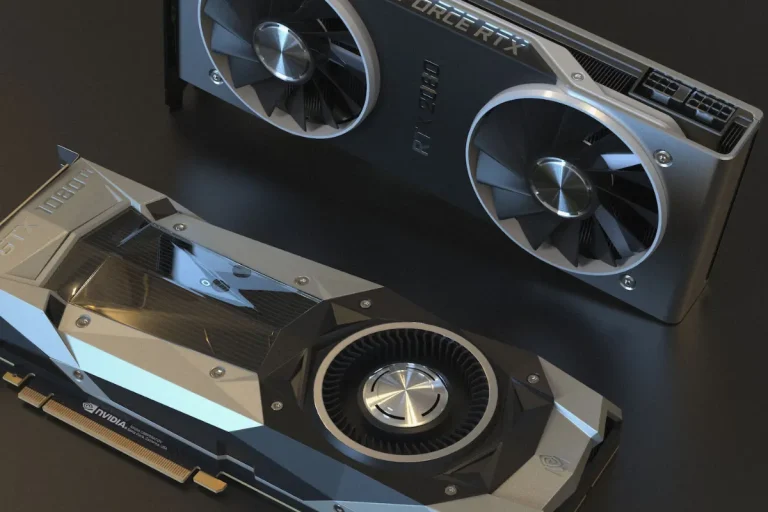

No, on a discrete graphics card GPU memory is separate from the system memory (RAM). It is dedicated memory on the card, often called VRAM, that holds the model weights, activations, and data batches the GPU works on during deep learning tasks.

Q2: Do all deep learning tasks require the same amount of GPU memory?

No, the GPU memory requirements vary depending on the complexity of the deep learning task, the size of the models, and the input data. Different tasks like image classification, NLP, and object detection have different memory requirements.

Q3: Can I use a GPU with less memory than the recommended range?

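Using a GPU with less memory than recommended may force smaller batch sizes and slower training, or cause out-of-memory failures during training. It is advisable to use a GPU with at least the recommended minimum memory, although memory-saving techniques such as smaller batches or lower input resolution can sometimes make a smaller GPU workable.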

Q4: Can I use a GPU with more memory than the recommended range?

Using a GPU with more memory than the recommended range is generally not an issue. However, it may not necessarily improve performance unless the deep learning task or dataset is particularly large or complex.

Q5: What happens if my GPU runs out of memory during training?

If the GPU runs out of memory during training, it can cause errors and halt the training process. This can be resolved by reducing the batch size, resizing input data, or using memory optimization techniques like gradient checkpointing or model parallelism.
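Reducing the batch size after an out-of-memory failure can be automated with a simple back-off loop. The sketch below is framework-agnostic: `try_step` is a hypothetical callable that runs one training step, and Python's built-in `MemoryError` stands in for whatever out-of-memory exception your framework actually raises. The 48-sample limit in `fake_step` is an invented threshold for demonstration only.

```python
from typing import Callable


def largest_fitting_batch(try_step: Callable[[int], None],
                          start: int, minimum: int = 1) -> int:
    """Halve the batch size until one training step succeeds."""
    batch = start
    while batch >= minimum:
        try:
            try_step(batch)
            return batch
        except MemoryError:  # stand-in for a framework-specific OOM error
            batch //= 2
    raise MemoryError("no batch size at or above the minimum fits in memory")


def fake_step(batch_size: int) -> None:
    # Hypothetical device limit: anything above 48 samples "runs out of memory".
    if batch_size > 48:
        raise MemoryError


target_batch = 256
best = largest_fitting_batch(fake_step, start=target_batch)
# Gradient accumulation steps needed to keep the same effective batch size.
accum_steps = -(-target_batch // best)  # ceiling division
print(best, accum_steps)  # 32 8
```

Accumulating gradients over several small batches before each optimizer update keeps the effective batch size unchanged while only one small batch occupies GPU memory at a time.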

Conclusion

Having the right amount of GPU memory is essential for smooth and efficient deep-learning tasks. The memory requirements vary based on the complexity of the task and the size of the models and datasets.

The recommended GPU memory ranges are a starting point: measure actual usage for your own model, batch size, and input size, and adjust from there. Remember, the right memory makes all the difference!